Two weeks ago I wrote about how a new model from Google addresses some classic AI image fails by natively generating images rather than handing off to a separate AI image generation model.

Two days ago OpenAI rolled out a similar capability to ChatGPT. Rather than handing off image generation requests to DALL-E, ChatGPT now uses its default multimodal model (GPT-4o) to generate images.

It delivers a major step change in image quality, text rendering and prompt adherence compared with DALL-E 3, which was released way back in October 2023 and which I awarded just 2.5 stars in my December 2024 comparison of AI image generators.

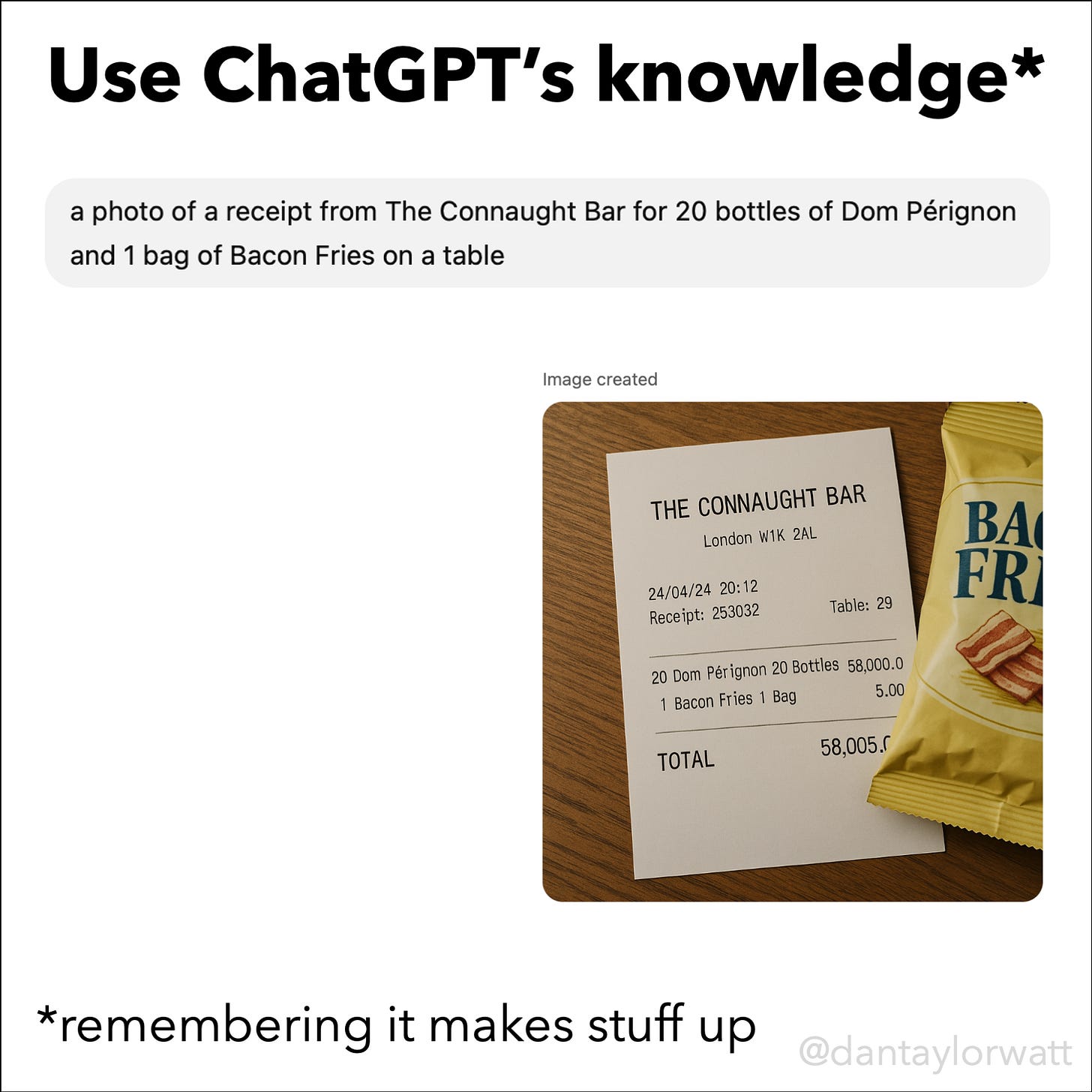

However, as with Google’s new model (which isn’t yet plumbed into the Gemini website or app), GPT-4o can now also bring its other skills to bear on image generation.

One of its most useful? Understanding plain English.

Whilst AI image generators have long offered the ability to refine generated images using a combination of highlighting tools and re-prompting, being able to ask for refinements using natural language in the main chat interface makes this capability more accessible.

The ability to edit and combine existing images is also not new, but is going to be used by a lot more people now.

Here are 8 things to try:

It’s also pretty good at maintaining consistent characters/scenes across multiple generations and creating infographics, although you may have to regenerate some elements using its Select tool (accessible by clicking/tapping a generated image).

Limitations

It’s not the quickest model and generated images are only 1024 pixels, with no option to upscale.

It also suffers from most of the same limitations as other state-of-the-art AI image generators, although the ability to refine using natural language makes the revision process feel less arduous.

Safeguards

Some safeguards are in place around removing watermarks from images (which caught Google out last week) and generating photos of real people (I was relieved when it aborted its attempt to dress me in an Olaf costume). However, there’s still clearly scope for misuse and OpenAI are likely to be closing loopholes for a few weeks.

Whilst professionals wanting high levels of control and finesse may not be canceling their Midjourney subscriptions just yet, the power of using a multimodal model to refine images is now very evident and it’s only going to get better.