What do ‘reasoning’ and ‘deep research’ AI models mean in practice?

In the beginning¹ was ChatGPT.

Now there’s a bewildering number of different AI assistants and models available, with more launching every week.

There’s also the advent of two new approaches to make sense of:

Reasoning, where the model spends more time working through different approaches before responding.

Deep research, where the model spends more time finding and analysing relevant data before generating in-depth reports with citations.

Much of the coverage of these new approaches has focused on their impact on AI models’ performance against various science, maths and coding benchmarks.

But what do they mean in practice?

Here’s a quick explainer.

Reasoning

OpenAI was first to deploy a dedicated reasoning model with o1, which was made available to ChatGPT’s paying subscribers last September.

It differed from previous models in a couple of key respects:

It codified chain-of-thought prompting (breaking problems down into smaller sequential steps before generating a response).

It increased the amount of computation used when generating a response (known as test-time compute).

Google previewed its own reasoning model, Gemini 2.0 Flash Thinking Experimental, in December. It added a third differentiator over previous models, which OpenAI subsequently adopted:

It shared its ‘thought process’.

However, it was the release of DeepSeek-R1 in January that ignited more mainstream interest in reasoning models, because it was freely available, more efficiently trained, and open sourced so folk could download and modify it.

Reasoning models proved instantly useful to mathematicians and software developers who wanted more considered calculations and code suggestions, as well as more transparency over how responses were arrived at.

The benefits for the rest of us are a bit less obvious. I’d summarise them thus:

Fewer made-up facts

Better at following multi-step instructions

Better at explaining things clearly

Less likely to double down on a wrong approach

When they do go off track, it’s easier to see how and why

Reasoning models aren’t always the right tool for the job, and at the moment the onus is on the user to know which model to select for different tasks.

One of my AI predictions for 2025 is that AI assistants will get better at automatically selecting the right model for the task in hand (see prediction #10).

Anthropic has already released a hybrid model, Claude 3.7 Sonnet, which lets you toggle between Normal and Extended thinking modes (rather than selecting an entirely different model). Meanwhile, OpenAI’s CEO, Sam Altman, has stated “a top goal for us is…creating systems that can use all our tools, know when to think for a long time or not, and generally be useful for a very wide range of tasks”.

Another watch-out with reasoning models/modes is that their willingness to try different approaches can lead them to invest a significant amount of test-time compute in trying to solve intractable problems. Below is the output of the 190 seconds DeepSeek-R1 spent trying to devise and then solve a more complex version of the river crossing puzzle (spoiler: its proposed approach doesn’t work).

I find the vast majority of my daily AI interactions are well served by the default models/modes of ChatGPT, Claude and Perplexity, and it’s only when I’m disappointed by their responses to knottier questions that I turn to reasoning models/modes.

Deep Research

Google debuted its snappily named Gemini 1.5 Pro with Deep Research last December. OpenAI launched ChatGPT’s lower-case deep research last month. Both operate in a similar fashion: they share a research plan with the user for input, then conduct multi-step research on the internet and generate an in-depth report with citations.

Here’s an example of me asking ChatGPT deep research to explore the impact of AI on wellbeing:

It started by asking me to clarify which aspects I was interested in. I responded “all of the above” and it got to work. Six minutes later, it had reviewed 43 sources and compiled a 3,000-word report.

Timothy B. Lee has a good round-up of reflections from different domain experts trying out ChatGPT deep research, including “An antitrust lawyer [who] told me an 8,000-word report ‘compares favorably with an entry-level attorney’ and that it would take 15 to 20 hours for a human researcher to compile the same information”.

Writing teacher David Perell posted a note opining that “The amount of expertise required to out-do an LLM is rising fast. For example, the quality of a well-prompted, ChatGPT Deep Research report is already higher than what I can produce in a day's worth of work on almost any subject”. He also posited which kinds of non-fiction writing will endure: writing informed by personal experience and/or offering a unique perspective.

The big watch-out with deep research models/modes is that they still make stuff up, and the presentation format (typically a long, structured document with lots of citations) can a) lull us into a false sense of security regarding their rigour and b) make it harder to spot fabrications.

I’d still advise only using deep research when you have enough knowledge of the topic to be able to spot any egregious errors (or where a few errors aren’t material). I’d also always treat it as input/stimulus rather than as a finished document to be shared with the world.

Where can I try out these models/modes?

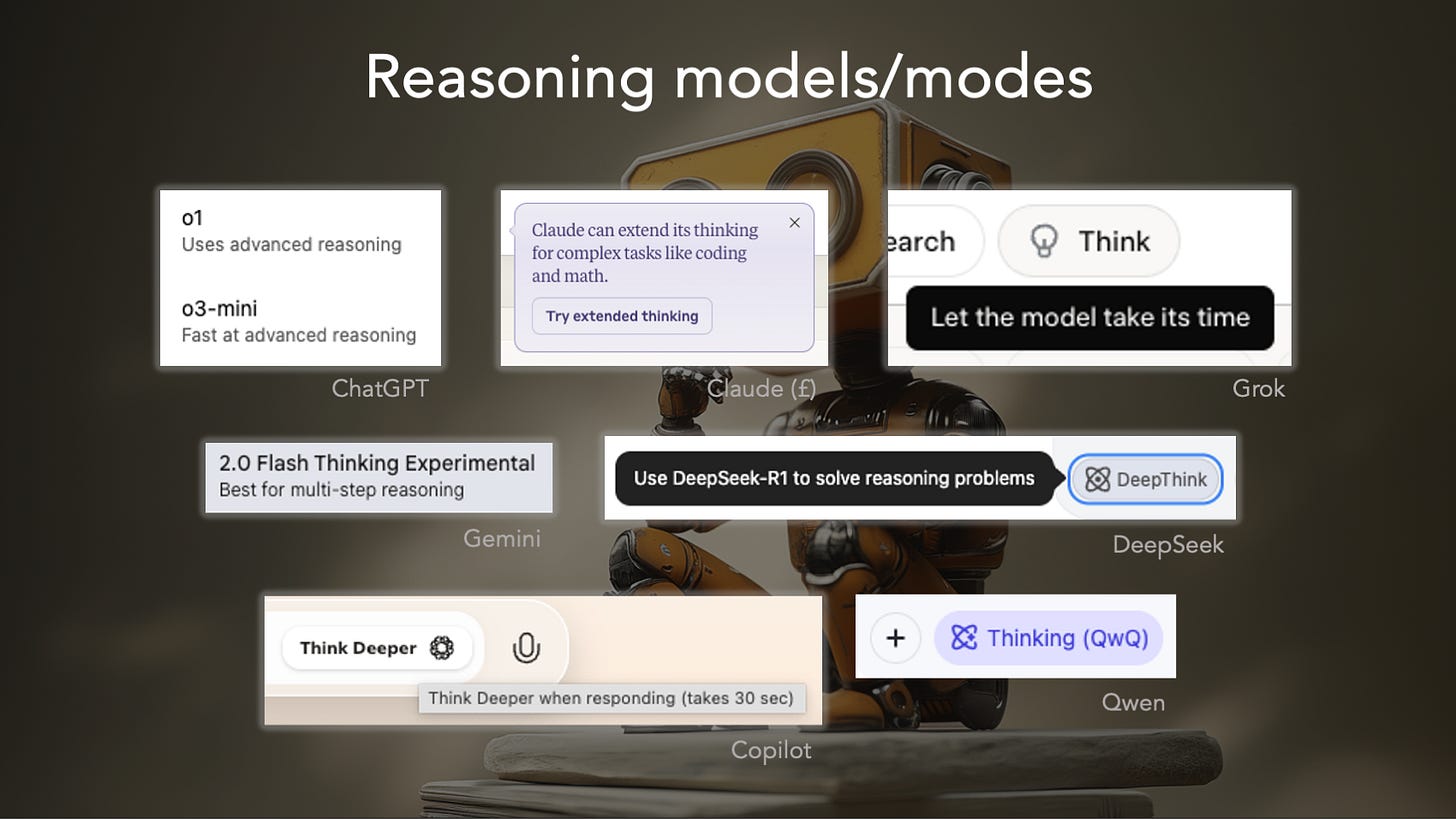

The main readily available reasoning models are:

OpenAI o1 (free via Microsoft Copilot’s Think Deeper, else requires $20pm ChatGPT Plus subscription)

OpenAI o3-mini (5 free queries a day via Perplexity, else requires $20pm ChatGPT Plus subscription)

Gemini 2.0 Flash Thinking (free via Google Gemini)

DeepSeek-R1 (free, although best accessed via Perplexity, which avoids sending your data to China)

Claude 3.7 Sonnet with Extended thinking (requires $20pm Claude Pro subscription)

Grok 3 Think & Big Brain (currently free for a limited period via Grok)

QwQ-32B & Qwen2.5-Max (free via Qwen Chat, although your data is sent to China)

My recommendation would be to head to Perplexity and give both OpenAI o3-mini and DeepSeek-R1 a go. If it’s a capability you want on an ongoing basis, I’d recommend forking out for Claude Pro or ChatGPT Plus.

The main readily available deep research models are:

Gemini 1.5 Pro with Deep Research (requires £20pm Google One subscription)

ChatGPT deep research (requires a paid ChatGPT subscription: $20pm Plus subscribers get 10 queries per month; $200pm Pro subscribers get 120 per month)

Perplexity Deep Research (up to 5 free queries per day; $20pm Pro subscribers get 500 queries per day)

Grok Deep Search (up to 5 free queries every 2 hours, $40pm X Premium+ subscribers get 50 queries per day)

My feelings about Elon Musk preclude me from recommending Grok, so I would suggest giving Perplexity Deep Research a go. However, if you really want to see what deep research models are capable of, I’d stump up the $20 for access to ChatGPT deep research.

Whilst reasoning generally raises the bar for the quality of AI models’ responses to more complex prompts, deep research opens up whole new use cases.

¹ The beginning of mass-adoption AI assistants, that is. Only Sam Altman thinks God created ChatGPT 😉