There’s no shortage of things to worry about in relation to AI: bias, hallucination, environmental impact, job displacement, abuse by malicious actors.

Today I’d like to focus on the impact of a smaller, more banal issue: AI’s propensity for verbosity.

More specifically, I mean AI assistants powered by Large Language Models (LLMs), which are becoming more popular as standalone products (ChatGPT passed 400m weekly active users in February) and are increasingly being woven into the software packages and operating systems billions of us use every day.

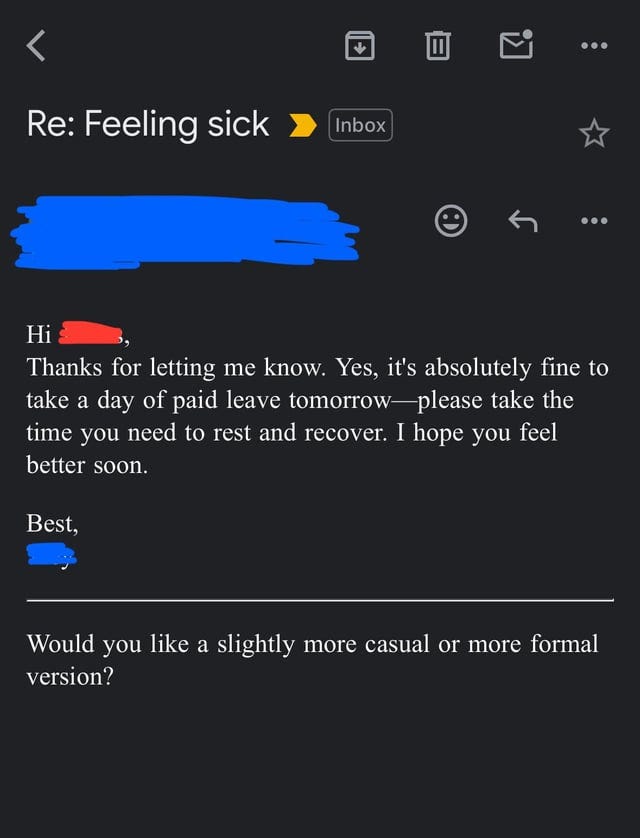

And it’s resulting in me receiving longer emails 😠

Why so many words?

Short answer: a combination of factors.

Longer answer:

LLMs are trained on huge corpora of text, a significant proportion of which consists of academic papers, textbooks, encyclopedias and educational websites, where comprehensive explanations are often favoured over more concise ones. This can get amplified during reinforcement learning from human feedback (RLHF), where human evaluators may favour responses that are more thorough, explanatory and - yes - long.

In addition, LLMs have what’s known as a ‘system prompt’ - a set of instructions input by the developer which aim to guide the LLM’s responses. Alongside instructions not to do certain things (e.g. defame, provide bomb-making instructions), these system prompts typically instruct the LLM to be helpful and engaging and to provide caveats/disclaimers, which can lead to longer responses.

Whilst some AI companies try to keep their system prompts under wraps, others proactively publish them. Anthropic, maker of my preferred AI assistant, Claude, publishes its system prompts here.

It’s worth having a read of the full prompt for its current default model (Claude 3.7 Sonnet) as it reveals a lot about how LLMs operate (and why Claude feels quite different to ChatGPT).

After informing the assistant of its name, developer and today’s date, the system prompt sets about defining its personality: “Claude enjoys helping humans and sees its role as an intelligent and kind assistant to the people, with depth and wisdom that makes it more than a mere tool.”

It gives permission for Claude to take the conversation in new directions: “Claude can suggest topics, take the conversation in new directions, offer observations, or illustrate points with its own thought experiments or concrete examples, just as a human would”.

These two instructions have the potential to amplify LLMs’ tendency towards verbosity, so it’s not surprising that Claude’s system prompt also includes multiple instructions designed to stop Claude prattling on:

“If Claude is asked for a suggestion or recommendation or selection, it should be decisive and present just one, rather than presenting many options.”

“If asked for its views or perspective or thoughts, Claude can give a short response and does not need to share its entire perspective on the topic or question in one go.”

“Claude provides the shortest answer it can to the person’s message.”

Those instructions aren’t necessarily enough to counter LLMs’ propensity for banging on.

Meanwhile, the advent of ‘reasoning’ and ‘deep research’ models/modes has increased the word count of LLMs’ responses by an order of magnitude.

Perplexity’s Deep Research system prompt goes as far as to explicitly instruct the LLM to “prioritise verbosity”(!)

Why is it a problem?

The human effort involved in writing has historically moderated the volume of written text one person can generate. Typing and dictation made it easier to get more words down on paper. The advent of generative AI has removed that moderating factor altogether.

A single prompt can result in tens of thousands of words being rendered in an original configuration. Which isn’t necessarily a problem in and of itself. Sometimes a lot of words are needed to unpack a topic.

The problem comes when that astonishing word-generating ability, coupled with LLMs’ tendency towards verbosity, is directed back at humans without due care, particularly in situations where less is very definitely more.

I’m talking about emails, LinkedIn posts, Airbnb listings, eBay descriptions. Places where the reader is likely working their way through lots of items and just requires the most salient information communicated succinctly.

Whilst still time-consuming to read, a long, tailored email once signalled that the sender had taken time and care. It now potentially creates the inverse impression - that the sender doesn’t value the reader’s time enough to communicate just the key information. A modern spin on Blaise Pascal’s “I only made this letter longer because I didn’t have the time to make it shorter”.

How to mitigate?

Whilst AI companies are best placed to tackle the AI verbosity problem upstream through training, RLHF and system prompts, there are a few things you can do as a user:

Add Custom Instructions

In addition to system prompts, most AI assistants now include a way for the user to provide instructions they want the model to follow across chats.

In ChatGPT this is via Settings > Personalization > Custom Instructions

In Claude it’s under Settings > Profile > Preferences

In Gemini it’s under Settings > Saved Info

Copilot and Grok don’t currently offer this functionality, although the copycat nature of AI chatbot development means it’s almost certainly in the pipeline.

My first and most treasured ChatGPT Custom Instruction? ‘Be succinct’.
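
If you reach a model through code rather than a chat window, the rough equivalent of a Custom Instruction is a standing message placed ahead of every conversation. Here is a minimal sketch, assuming the role/content message convention that several chat APIs use (some pass the system text as a separate parameter instead). The function names are hypothetical, and nothing here calls a real service.

```python
def build_messages(user_text, instruction="Be succinct."):
    """Prepend a standing instruction to a single user message.

    The 'system'/'user' role names are an assumption; check your
    provider's documentation for the exact shape it expects.
    """
    return [
        {"role": "system", "content": instruction},
        {"role": "user", "content": user_text},
    ]


def word_count(text):
    """Crude whitespace word count, handy for comparing reply lengths."""
    return len(text.split())


messages = build_messages("Summarise this email thread for me.")
```

Running `word_count` over replies with and without the instruction is a simple way to see whether it makes a difference for your own prompts.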

Ask AI to make your text shorter, not longer

An excellent rule of thumb from Laurie Voss (via Simon Willison):

“Is what you're doing taking a large amount of text and asking the LLM to convert it into a smaller amount of text? Then it's probably going to be great at it. If you're asking it to convert into a roughly equal amount of text it will be so-so. If you're asking it to create more text than you gave it, forget about it.”
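
That rule of thumb boils down to comparing how much text you give with how much you expect back. The sketch below is illustrative only: the function name and the 25% band used for ‘roughly equal’ are my own assumptions, not part of the original quote.

```python
def voss_rule(input_words, expected_output_words, tolerance=0.25):
    """Classify a task by its output-to-input word ratio.

    'tolerance' defines what counts as "roughly equal" and is an
    arbitrary illustrative choice.
    """
    if input_words <= 0:
        raise ValueError("input_words must be positive")
    ratio = expected_output_words / input_words
    if ratio < 1 - tolerance:
        return "probably great"   # condensing text
    if ratio <= 1 + tolerance:
        return "so-so"            # roughly like-for-like
    return "forget about it"      # expanding text


# Summarising a 1,000-word report into 100 words
summary_verdict = voss_rule(1000, 100)
# Turning a 20-word brief into an 800-word post
expand_verdict = voss_rule(20, 800)
```

By this measure, summarising a report is a good use of the tool and inflating a one-line brief into a long post is not.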

Don’t click the ‘Elaborate’ button

Let’s just all agree to write shorter emails, yes?

Do you have any insight into whether asking an LLM to be succinct will cause it to produce less well-thought-through responses, or just shorter ones? Would it produce a markedly different response if you ask it to produce a short answer, or let it give its normally verbose one and then ask it to shorten that response?