Why we’re obsessed with what AI *can’t* do

And why AI companies need to stop handing us sledgehammers to crack nuts

If I had a penny for every LinkedIn post / podcast / conference panel guest gleefully recounting AI’s failure at a given task then, well, I’d have a lot of pennies.

So why are we so obsessed with the things AI *can’t* currently do?

I’d suggest there are a few things at play here:

1.) Assuaging our fears

Generative AI is able to competently perform/assist with a bunch of creative tasks computers have historically struggled with. It’s emerged - and is evolving - very rapidly. That’s inherently threatening for many of us, and wanting to reassure ourselves by pointing to the things AI can’t do well (or at all) is an understandable response.

However, that reassurance can be short-lived as models continue to improve.

In my recent post on AI in video advertising creative, I embedded a few ads from this year’s Super Bowl parodying some of the common issues with early AI image generation models (extra limbs and fingers, distorted faces and malformed text). I suspect we won’t see similar ads at next year’s Super Bowl as those issues become less common and less acute in newer models.

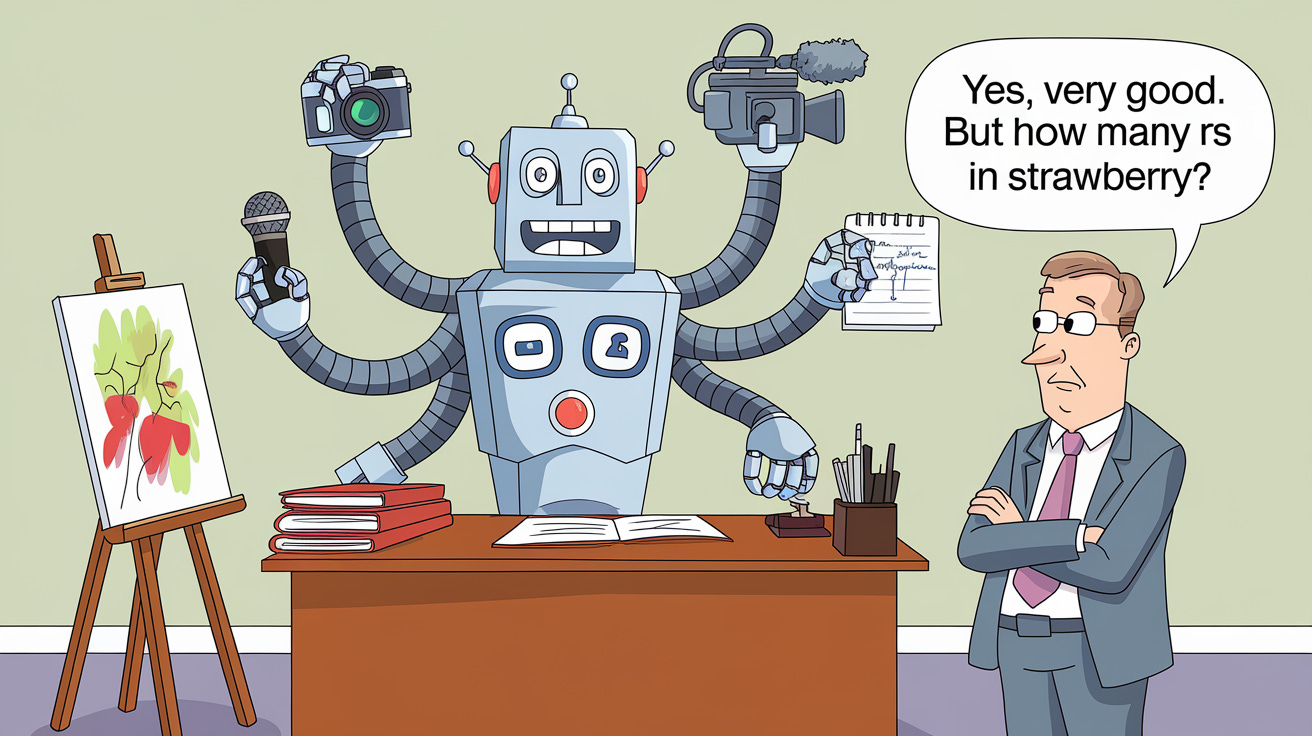

The below ad, which appeared on a building site wrap in Antwerp in June, is both funny and impactful. However, it may not ultimately age well in view of where the convergence of AI and robotics appears to be headed.

2.) Tempering the hype

Another driver is wanting to temper the hype.

Social media is awash with people declaring a given AI development “changes everything” or renders [insert name of well-established product] instantly redundant (spoiler: it doesn’t).

Meanwhile AI’s poster child, Sam Altman, has recently ratcheted his evangelical fervour up a couple of notches to try and persuade investors to part with more cash in pursuit of AGI (choice quote: “the path to the Intelligence Age is paved with compute, energy, and human will”. Translation: “give us your f***ing money”).

Pointing out what AI can’t do is arguably an important balancing measure when so much Kool-Aid is being imbibed.

3.) Trying to stop people from using sledgehammers to crack nuts

OpenAI’s most recently released model, o1, was codenamed Strawberry, as previous Large Language Models tended to struggle to identify the number of Rs in the word (because LLMs trade in tokens, i.e. multi-character chunks of text, rather than individual letters). Here’s GPT-4o repeatedly failing to correctly report the number of Rs in the names of various fruit, before I encouraged it to take a slower, more careful approach.

Whilst o1’s baked-in chain-of-thought approach enables it to reason its way to the right answer and show a summary of its workings (see below), it’s still not the right tool for the job.
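To make the "right tool for the job" point concrete, here is a minimal sketch of the same task done with plain string handling rather than a language model. The function name and the list of fruit are my own illustrative choices, not anything taken from the ChatGPT session above.

```python
def count_letter(word: str, letter: str) -> int:
    """Count case-insensitive occurrences of a single letter in a word."""
    return word.lower().count(letter.lower())


# Illustrative fruit names (an assumption, not the ones from the original chat).
fruits = ["strawberry", "raspberry", "cranberry", "pear"]

# Works on characters directly, so there is no tokenisation to trip over.
r_counts = {fruit: count_letter(fruit, "r") for fruit in fruits}
print(r_counts)
```

A one-line string operation gets this right every time, with no chain of thought required.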

AI companies need to invest more time and effort in developing product experiences which a) pick the right tool for the task in hand and b) admit they can’t help when they don’t have the knowledge/capability.

Users of ChatGPT shouldn’t have to make sense of this drop down:

And it shouldn’t be on the user to know not to use a power/water-hungry AI model for tasks where a dictionary/calculator/Google search would be a better/more energy-efficient option.

There are some green shoots to be found in Apple’s triage approach (handling simple queries on device and only handing off more complex queries to cloud-based models) and in Perplexity reassuringly reporting that “the answer to this is unknown on the internet”.
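For illustration only, here is a toy sketch of what "pick the right tool first" could look like in code. It is not how Apple, Perplexity or OpenAI actually route queries; the `route` function, the regular expression and the tool names are all hypothetical. The idea is simply to try cheap, deterministic tools before falling back to a language model.

```python
import ast
import operator
import re

# Arithmetic operators the toy calculator is willing to evaluate.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}

# Hypothetical pattern for questions like "How many Rs in strawberry?"
LETTER_COUNT = re.compile(r"how many (\w)s? (?:are )?in (\w+)", re.IGNORECASE)


def _calc(node):
    """Evaluate a parsed expression made only of numbers and + - * /."""
    if (
        isinstance(node, ast.Constant)
        and isinstance(node.value, (int, float))
        and not isinstance(node.value, bool)
    ):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_calc(node.left), _calc(node.right))
    raise ValueError("not simple arithmetic")


def route(query: str) -> tuple[str, str]:
    """Return (tool_name, answer), handing off to a model only as a last resort."""
    match = LETTER_COUNT.search(query)
    if match:
        letter, word = match.group(1), match.group(2)
        return "string_count", str(word.lower().count(letter.lower()))
    try:
        tree = ast.parse(query.strip().rstrip("?"), mode="eval")
        return "calculator", str(_calc(tree.body))
    except (SyntaxError, ValueError, ZeroDivisionError):
        # Placeholder: a real product would call a language model here.
        return "llm", "(hand off to a language model)"


print(route("How many Rs in strawberry?"))
print(route("12 * 7"))
print(route("Why is the sky blue?"))
```

A real router would need far more robust intent detection than a single regular expression, but the shape is the same: the expensive model is the fallback rather than the default.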

However, these approaches are currently the exception rather than the rule.

For now we’re going to need to keep reminding ourselves and each other that generative AI models hallucinate and aren’t good at maths (although other AI models are) and be mindful about what we use them for.

On a related note, this two-part deep dive ‘Is AI eating all the energy?’ (discovered via Duncan Geere’s newsletter) is a thoughtful counterpoint to some of the more reductive coverage of AI’s environmental impact. It’s a long and detailed read but the paragraphs Duncan pulled out in his newsletter neatly summarise some of the key points:

A LLM response takes 10-15x more energy than a Google search, sure. But does using a tool like ChatGPT as a research assistant consume more energy than searching for as long as it takes to get the same information? An LLM response just has to save you 10 clicks to break even, so as long as you’re really using it and not just asking something you could easily search yourself, it’s worth it.

The problem isn’t the tech, it’s the arms race. The tech isn’t inefficient at what it does. Individual consumer choices are not what’s impacting the environment. The cost of AI use at any normal human scale doesn’t waste a problematic amount of energy. The problem is the astronomical cost of creating AI systems massive enough to ensure corporate dominance. Many companies are all trying to develop the same products: an everything app, a digital assistant, a text/image/audio/video content generator, etc. And they’re all doing it in the hopes that theirs is the only one that people will ever use. They actively want their competitors' investment to not pay off, to become waste.

it seems like there’s a lot of misplaced anger towards individual users of AI services. Individual users are — empirically — not being irresponsible or wasteful just by using AI. It is wrong to treat AI use as a categorical moral failing, especially when there are people responsible for AI waste, and they’re not the users. The blame for these problems falls squarely on the shoulders of the people responsible for managing systems at scale. Using ChatGPT doesn’t tip that scale. You can’t get mad at people who are being responsible as reaction to other people who are not.
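The break-even claim in the first quoted paragraph can be sanity-checked with some back-of-the-envelope arithmetic. This sketch takes the quoted 10-15x ratio at face value and assumes one "click" costs about the same energy as one search; both are assumptions carried over from the quote rather than measurements, and the function name is my own.

```python
def net_search_units_saved(searches_avoided: float, llm_to_search_ratio: float) -> float:
    """Net energy saved by one LLM response, in units of 'one web search'.

    Positive means the LLM response used less energy than the searches it replaced.
    """
    return searches_avoided - llm_to_search_ratio


# Ratios taken from the quoted range (10-15x the energy of a search).
for ratio in (10, 15):
    for avoided in (5, 10, 15, 20):
        saved = net_search_units_saved(avoided, ratio)
        print(f"ratio {ratio}x, {avoided} searches avoided: net {saved:+} search units")
```

At the low end of the range the break-even point is the 10 clicks the quote mentions; at the high end it is 15.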

Interesting perspective, Dan.

When I first read this, I thought it was a fascinating topic. But after reflecting on it (as a scientist, I always like to go back to first principles), I started wondering: were the media trying to temper the AI hype, or were they more focused on reassuring people?

Since I started writing myself, I've realized that a lot of the time, it's about finding a fresh angle—something different that hasn't been covered yet, and that resonates with the audience. Maybe, in this case, it really is about offering reassurance :)