Vibe coding supertest

(or how I built an ALT text generator without knowing how to code)

I am not a software engineer. My rudimentary coding knowledge dates back to the early noughties (and there’s not much call for the <font> tag these days…)

I have led large product development teams with dozens of talented software engineers whose precious time and attention were ruthlessly prioritised on work that data analysis, research and user testing indicated would have the highest impact.

Developing exploratory prototypes and nice-to-have internal tools was always a tough thing to prioritise over improvements to the user-facing products.

The advent of vibe coding has opened up an alternative route to creating simple web apps and prototyping more complex applications. A route which doesn’t require ring-fencing time from software engineers.

I’ve written previously about using Claude’s Artifacts to create simple web tools. Released last summer, it was one of the first vibe coding platforms that didn’t require the user to have any technical knowledge.

Since then, countless other options have emerged for users without any coding knowledge wanting to create tools and prototypes.

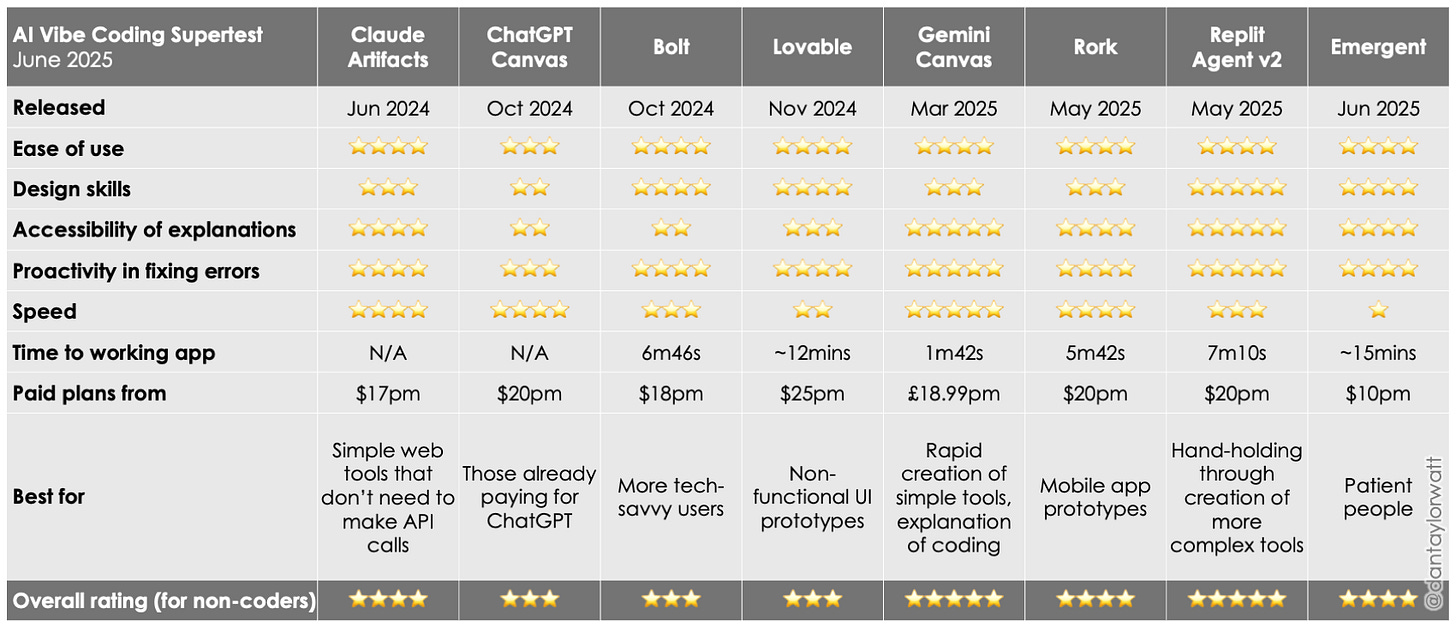

I gave eight of these a road test by asking each of them to create me a tool to generate ALT text for images - a real use-case from one of my clients, who wanted to free up their editorial team to do more creative work.

I asked each of them to adhere to the BBC’s ALT text guidelines, which are decent and would also test the models’ ability to read and follow guidance spread across several pages.

Here’s the full prompt I used:

build an app that generates ALT text for images inline with these guidelines: https://www.bbc.co.uk/gel/how-to-write-text-descriptions-alt-text. enable the user to bulk upload images and easily copy the resulting ALT text

It’s a more complicated ask than most of the tools I’ve built this way, as it requires an AI model with computer vision capabilities to analyse and describe the images.

And here’s how they fared:

Claude Artifacts

Released: June 2024

Claude quickly generated a plausible interface, but it didn’t actually generate any ALT text. It fessed up that “The app simulates AI-powered ALT text generation (in a real implementation, you'd integrate with services like OpenAI's Vision API, Google Cloud Vision, or Azure Computer Vision)”. I asked it to “use claude's image capabilities to generate the ALT text”, which it said it had done, but it still didn’t work.

Claude explained that “Users just need to add their Anthropic API key to get started”. A lot of non-coders would have given up at this point. I persevered, generating an API key and pasting it into a box in the interface. However, it still didn’t work because “browsers block direct API calls to external services like Anthropic due to CORS (Cross-Origin Resource Sharing) restrictions”. That’s where I gave up.
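Some context on that last error: browsers enforce CORS, so a web page can only read a response from another origin if that server explicitly allows it. Whether or not that was the whole story here, the usual fix is to make the API call from a small server you control, so the browser only talks to your own app and the API key never ships in client-side code. The sketch below shows roughly what that server-side call could look like in Python, using only the standard library. It assumes Anthropic’s Messages API request shape (`POST /v1/messages` with `x-api-key` and `anthropic-version` headers and a base64 image block); the model id and the prompt wording are placeholders of mine, not anything Claude generated.

```python
import base64
import json
import urllib.request

# Assumption: Anthropic Messages API endpoint and version header as publicly documented.
ANTHROPIC_URL = "https://api.anthropic.com/v1/messages"
ANTHROPIC_VERSION = "2023-06-01"
# Placeholder model id: substitute any current vision-capable Claude model.
MODEL = "claude-3-5-sonnet-latest"

# Hypothetical instruction text; tune it to the guidelines you want followed.
ALT_TEXT_PROMPT = (
    "Write concise ALT text for this image. Describe what matters in context "
    "and do not start with 'Image of' or 'Picture of'."
)


def build_claude_payload(image_bytes: bytes, media_type: str) -> dict:
    """Build a request body containing one base64-encoded image and one instruction."""
    encoded = base64.standard_b64encode(image_bytes).decode("ascii")
    return {
        "model": MODEL,
        "max_tokens": 200,
        "messages": [
            {
                "role": "user",
                "content": [
                    {
                        "type": "image",
                        "source": {"type": "base64", "media_type": media_type, "data": encoded},
                    },
                    {"type": "text", "text": ALT_TEXT_PROMPT},
                ],
            }
        ],
    }


def generate_alt_text(image_bytes: bytes, media_type: str, api_key: str) -> str:
    """Server-side call: the key stays on the server and no cross-origin browser request is made."""
    body = json.dumps(build_claude_payload(image_bytes, media_type)).encode("utf-8")
    request = urllib.request.Request(
        ANTHROPIC_URL,
        data=body,
        headers={
            "x-api-key": api_key,
            "anthropic-version": ANTHROPIC_VERSION,
            "content-type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        data = json.load(response)
    # The reply's content is a list of blocks; return the first text block.
    return next(block["text"] for block in data["content"] if block["type"] == "text")
```

In a working app, `generate_alt_text` would sit behind a route on that server: the browser uploads each image to the route, and only the server talks to Anthropic.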

I’ve had a lot of success creating tools with Claude but in this particular instance, it didn’t deliver a fully functioning tool.

Ease of use: ⭐⭐⭐⭐

Design skills: ⭐⭐⭐

Accessibility of explanations: ⭐⭐⭐⭐

Proactivity in attempting to fix errors: ⭐⭐⭐⭐

Speed: ⭐⭐⭐⭐

Time to create fully-functioning app: N/A

Cost: Free with limitations, paid plans start at $17pm

ChatGPT Canvas

Released: October 2024

It was a similar story with ChatGPT Canvas. It generated “a working React app scaffold” which didn’t actually generate any ALT text. I asked it to use ChatGPT's own computer vision capabilities to create the ALT text. It created me an API endpoint but advised “You'll need to implement this endpoint server-side to handle image processing with GPT-4o”. Reader, I did not.

Whilst neither gave me the working app I was after, Claude did a better job than ChatGPT of explaining and offering to resolve issues.

Ease of use: ⭐⭐⭐

Design skills: ⭐⭐

Accessibility of explanations: ⭐⭐

Proactivity in attempting to fix errors: ⭐⭐⭐

Speed: ⭐⭐⭐⭐

Time to create fully-functioning app: N/A

Cost: Free with limitations, paid plans start at $20pm

Bolt

Released: October 2024

Bolt’s first pass looked a lot nicer than Claude’s or ChatGPT’s and didn’t throw any errors. However, the generated ALT text once again turned out to be placeholder text. I pointed this out and asked it to use computer vision to generate the ALT text. It asked me to add my “OpenAI API key to the environment variables as VITE_OPENAI_API_KEY”. Not the most intelligible message for a non-coder. When prompted, it did talk me through how to add my OpenAI API key, but it still didn’t work. It then deduced it was using a deprecated endpoint and resolved a Terminal error.

Then I ran out of free tokens and had to upgrade to a paid plan to see if it could finish the job. After it resolved a JSON error and rebuilt my app (having inadvertently replaced it with a generic React component - as you do), I finally had my first fully working ALT text generator! And the descriptions, generated by GPT-4o, were good (e.g. ‘A quirky robot made of vintage television stands against a moss-covered rock, displaying static screens’).
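That `VITE_OPENAI_API_KEY` instruction is worth unpacking. In a Vite project, environment variables prefixed with `VITE_` are exposed to client-side code, so the key ends up in the JavaScript that anyone using the app can inspect: acceptable for a personal tool running on your own machine, risky for anything public. A minimal server-side alternative, sketched in Python under my own assumptions, reads the key from an ordinary environment variable (the name `OPENAI_API_KEY` is assumed) and builds a GPT-4o request following OpenAI’s Chat Completions format, with the image inlined as a base64 data URL. It is not the code Bolt generated, and it only builds the request rather than sending it.

```python
import base64
import os

OPENAI_URL = "https://api.openai.com/v1/chat/completions"


def read_api_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Read the key on the server, failing loudly if it has not been set."""
    key = os.environ.get(env_var)
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable before starting the server")
    return key


def build_openai_request(image_bytes: bytes, media_type: str, api_key: str) -> tuple[str, dict, dict]:
    """Return (url, headers, json_body) for a single-image ALT text request."""
    # Images can be sent inline as a data URL rather than a public link.
    data_url = f"data:{media_type};base64,{base64.b64encode(image_bytes).decode('ascii')}"
    headers = {"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"}
    body = {
        "model": "gpt-4o",
        "messages": [
            {
                "role": "user",
                "content": [
                    # Hypothetical instruction text; tune it to the guidelines being followed.
                    {"type": "text", "text": "Write concise ALT text for this image."},
                    {"type": "image_url", "image_url": {"url": data_url}},
                ],
            }
        ],
        "max_tokens": 150,
    }
    return OPENAI_URL, headers, body
```

Keeping the key in the server’s environment means it never appears in the files a visitor’s browser downloads.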

Ease of use: ⭐⭐⭐⭐

Design skills: ⭐⭐⭐⭐

Accessibility of explanations: ⭐⭐

Proactivity in attempting to fix errors: ⭐⭐⭐⭐

Speed: ⭐⭐⭐

Time to create fully-functioning app: 6m46s

Cost: Limited free tokens, paid plans start at $18pm

Lovable

Released: November 2024

Guess what? A nice-looking app generating more placeholder text (its response when challenged: “You're absolutely right! The current implementation just uses random sample descriptions instead of actually analyzing the uploaded images.”). Rather than asking for an API key, Lovable tried using “Hugging Face Transformers to run AI models directly in the browser”. That didn’t work, so it “updated the vision service to use a more reliable model (Xenova/vit-base-patch16-224) that should work properly in the browser environment”. This did result in some image-specific ALT text, although it was slow to generate and of very poor quality (the image showing a robot stuck between a rock and a hard place was assigned the ALT text: “Image showing milk can (70% confidence).”). Starting each description with ‘Image showing’ suggests it also wasn’t following the BBC guidelines.

After some prodding it admitted “You're right, the current ALT text descriptions are poor quality because we're using a basic image classification model that only gives single labels like "tiger" or "person" instead of descriptive captions suitable for ALT text.”

It offered to replace the classification model with an image captioning model but the resulting ALT text was still pretty poor (e.g. “A person is standing on a body of water.”)
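Lovable’s output shows some tell-tale signs of machine-generated ALT text: a redundant opening, a leftover classifier confidence score and single-word labels. A cheap safeguard for any of these tools is to check each description before it is copied. The function below is a hypothetical sketch: the list of redundant openings, the confidence pattern and the three-word minimum are my assumptions based on the failures described above, not rules taken from the BBC guidelines. Heuristics like these can’t catch a vague but well-formed caption such as “A person is standing on a body of water.”, so a human check is still needed.

```python
import re

# Assumed openings that duplicate what a screen reader already announces.
REDUNDANT_OPENINGS = ("image showing", "image of", "picture of", "photo of", "photograph of")
# Matches classifier residue such as "(70% confidence)".
CONFIDENCE_PATTERN = re.compile(r"\(\s*\d+(?:\.\d+)?%\s*confidence\s*\)", re.IGNORECASE)
# Assumed minimum: single labels like "tiger" are not descriptions.
MIN_WORDS = 3


def alt_text_problems(alt_text: str) -> list[str]:
    """Return human-readable problems found in a piece of generated ALT text."""
    problems = []
    if alt_text.strip().lower().startswith(REDUNDANT_OPENINGS):
        problems.append("starts with a redundant phrase such as 'Image showing'")
    if CONFIDENCE_PATTERN.search(alt_text):
        problems.append("contains a classifier confidence score")
    if len(alt_text.split()) < MIN_WORDS:
        problems.append("too short to describe the image")
    return problems
```

Run against Lovable’s first attempt, this flags both the opening and the confidence score, while a description like Gemini’s later “A foot dangles above blue water…” passes cleanly.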

Ease of use: ⭐⭐⭐⭐

Design skills: ⭐⭐⭐⭐

Accessibility of explanations: ⭐⭐⭐

Proactivity in attempting to fix errors: ⭐⭐⭐⭐

Speed: ⭐⭐

Time to create fully-functioning app: ~12 mins

Cost: Free for public projects, paid plans start at $25pm

Gemini

Released: March 2025

After an initial hiccup due to “a syntax error in the JavaScript code where the API URL was being constructed. Specifically, I removed an unnecessary backslash before the opening backtick of the template literal”, Gemini rendered a fully functioning ALT text generator, which output impressive descriptions (e.g. ‘A foot dangles above blue water, its toes just touching the surface and creating ripples’). Its advantage? Being a fully multimodal model (Gemini 2.5 Pro) with built-in computer vision capabilities (so no need for API keys). It also did the best job of explaining the changes it was making and why - increasing its potential as a code learning tool.

Ease of use: ⭐⭐⭐⭐

Design skills: ⭐⭐⭐

Accessibility of explanations: ⭐⭐⭐⭐⭐

Proactivity in attempting to fix errors: ⭐⭐⭐⭐⭐

Speed: ⭐⭐⭐⭐⭐

Time to create fully-functioning app: 1m42s

Cost: Free with limitations, paid plans start at £18.99pm

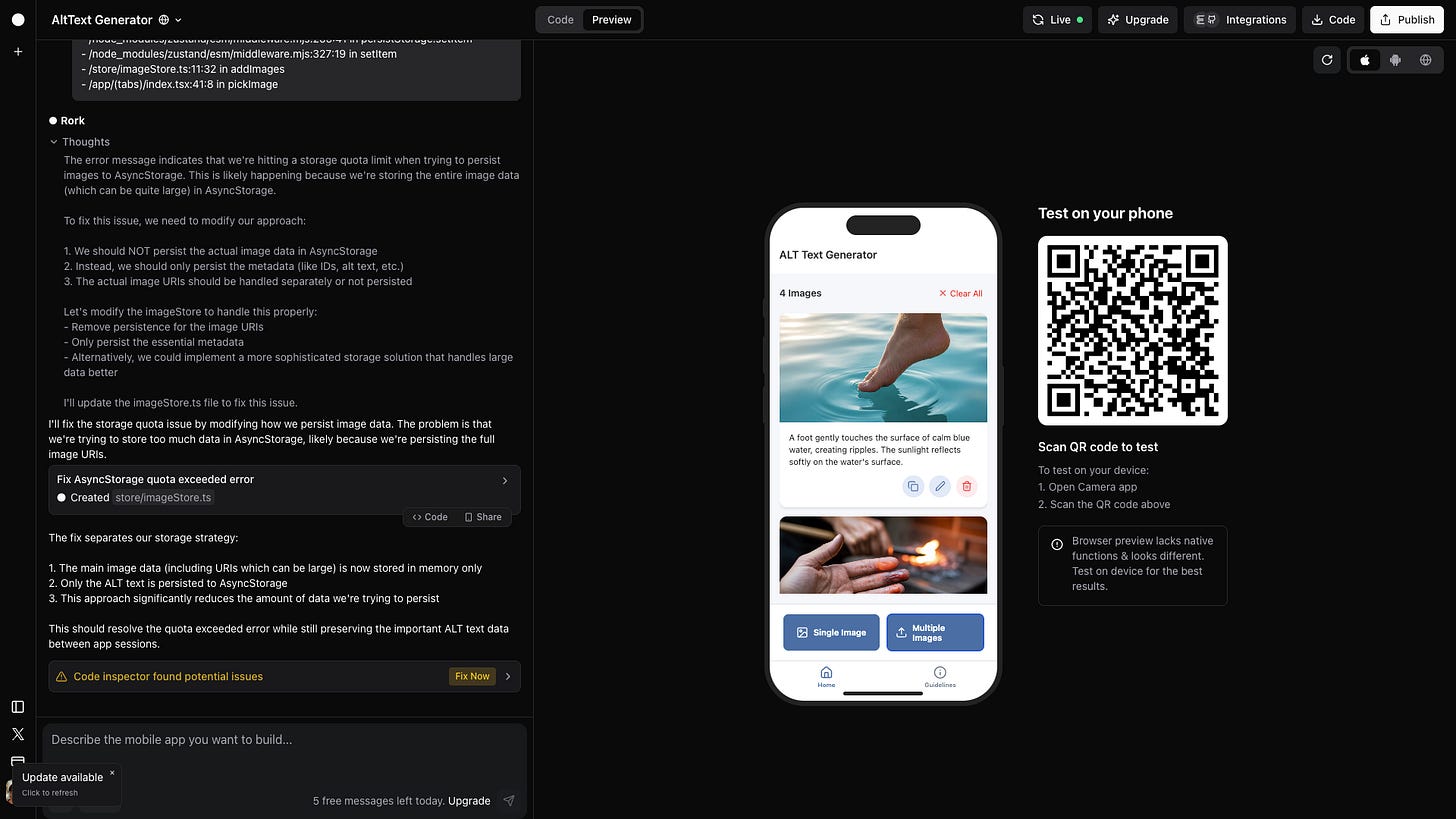

Rork

Released: May 2025

Rork is squarely targeting the mobile app market and defaults to creating an iOS app with options to view Android and web versions. It did a decent job of planning the app, presented in ‘Thinking’ blocks (now a familiar design pattern from AI reasoning models). It did encounter a couple of issues but it resolved them and created a simple but fully functional app with excellent ALT text image descriptions which worked both in my browser and on my iPhone (testing the mobile app on a handset requires Expo Go).

Rork doesn’t shy away from showing you the code behind the curtain, which could be off-putting for a non-coder, although I did appreciate how it explained some non-critical errors as “like spelling suggestions in Word”.

Ease of use: ⭐⭐⭐⭐

Design skills: ⭐⭐⭐

Accessibility of explanations: ⭐⭐⭐⭐

Proactivity in attempting to fix errors: ⭐⭐⭐⭐

Speed: ⭐⭐⭐⭐

Time to create fully-functioning app: 5m42s

Cost: Free with limitations (5 free messages per day), paid plans start at $20pm

Replit

Released: May 2025

Replit differed from the other platforms I tested by presenting a plan for me to approve before it dived into coding. It also presented a visual preview whilst it was coding. The coding itself wasn’t the fastest. However, it did proactively identify that I’d need an OpenAI API key, clearly explained how to generate one and provided a text box for me to enter it in. It then tested the app before inviting me to upload an image. After resolving a bug with the image previews not displaying, I had another fully functioning ALT text generator, with slightly shorter GPT-4o-generated descriptions than Bolt’s (e.g. ‘A robot made of vintage TVs climbing between mossy rocks.’).

It has a busier interface than the other platforms I tested but effectively signposted important messages. It also added a number of nice features and messaging to the finished app.

Ease of use: ⭐⭐⭐⭐

Design skills: ⭐⭐⭐⭐⭐

Accessibility of explanations: ⭐⭐⭐⭐⭐

Proactivity in attempting to fix errors: ⭐⭐⭐⭐⭐

Speed: ⭐⭐⭐

Time to create fully-functioning app: 7m10s

Cost: Free to try (5 ‘checkpoints’), paid plans start at $20pm

Emergent

Released: June 2025

Emergent is currently an invitation-only beta, with a ‘Lite’ version for those whose names aren’t on the list.

After entering my prompt, I had to wait for four minutes whilst it set up a ‘safe environment’ before ‘starting the agent’.

It then created a sensible plan and asked for my input on which AI service I wanted to use for the image analysis - making it clear I would need an API key - and asked my preferences on supported image formats and the maximum number of images to process at once.

It then asked me “which specific OpenAI model would you like to use for image analysis” with five options to choose from.
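Emergent’s questions about supported formats and how many images to process at once map onto a common design for bulk upload: validate each file first, then send images to the vision model a few at a time so that one large upload doesn’t hit rate limits or stall on a single failure. Here’s a minimal sketch using Python’s thread pool, where `describe` is a hypothetical stand-in for whichever model call the app makes; the accepted formats, the 20-image cap and the default of three concurrent requests are all assumptions.

```python
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path
from typing import Callable

# Assumed set of accepted formats; adjust to whatever the app supports.
SUPPORTED_SUFFIXES = {".jpg", ".jpeg", ".png", ".webp", ".gif"}


def generate_batch(
    paths: list[str],
    describe: Callable[[str], str],
    max_concurrent: int = 3,
    max_images: int = 20,
) -> dict[str, str]:
    """Map each file path to its ALT text, a skip notice or an error message, in upload order."""
    if len(paths) > max_images:
        raise ValueError(f"Please upload at most {max_images} images at once")

    results: dict[str, str] = {}
    accepted = []
    for path in paths:
        if Path(path).suffix.lower() in SUPPORTED_SUFFIXES:
            accepted.append(path)
        else:
            results[path] = "Skipped: unsupported file format"

    def safe_describe(path: str) -> str:
        # One failed image should not abort the whole batch.
        try:
            return describe(path)
        except Exception as exc:
            return f"Error: {exc}"

    # At most max_concurrent model calls are in flight at any time;
    # pool.map yields results in the same order as `accepted`.
    with ThreadPoolExecutor(max_workers=max_concurrent) as pool:
        for path, text in zip(accepted, pool.map(safe_describe, accepted)):
            results[path] = text

    # Return entries in the order the user uploaded them.
    return {path: results[path] for path in paths}
```

Returning a per-image message rather than raising keeps the interface simple: every uploaded file gets a row, and only the failures need a retry.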

The coding itself was pretty slow (by AI standards!) and the agent encountered a bunch of issues. After 10 minutes it was ready to test. The upload and ALT text generation worked fine; however, clicking the ‘Copy’ button generated “Uncaught runtime errors”, which I had to prompt it to resolve.

Ease of use: ⭐⭐⭐⭐

Design skills: ⭐⭐⭐⭐

Accessibility of explanations: ⭐⭐⭐⭐

Proactivity in attempting to fix errors: ⭐⭐⭐⭐

Speed: ⭐

Time to create fully-functioning app: ~15 mins

Cost: Free to try (limited monthly credits), paid plans start at $10pm

Conclusions

As you might expect, the dedicated vibe coding platforms took longer than the general-purpose AI assistants (Claude, ChatGPT & Gemini) but tended to produce better-looking, more fully featured apps.

For this particular challenge, Gemini was the only general-purpose AI assistant that created a fully functioning app, although for simple tools that don’t need storage or API calls to other services, Claude’s still a good option.

Of the dedicated vibe coding apps, I most liked Replit’s approach to this challenge, clocking the need for an API key without prompting and creating the most fully featured and visually appealing app.

If you’re looking to create a mobile app, I was impressed with Rork.

None of these tools are a substitute for a capable engineering team when it comes to developing secure, stable, scalable consumer products, but they do provide a viable route to creating personal tools and prototypes without a dedicated engineering team.

They also provide a great way for non-coders to start to learn about coding, especially those platforms which take the trouble to explain what they’re doing in easy-to-understand terms.