TV’s generative AI transparency challenge

The last 6 months have seen a growing number of media organisations making public statements about their planned approach to generative AI.

The FT and The Guardian were amongst the first out of the blocks, back in the spring.

And the BBC set out its approach in October.

It’s great to see media organisations doing this and the principles they lay out are generally very sound.

However, one sentence in the BBC’s principles jumped out at me. It makes sense in the abstract, but I believe it is likely to be challenging in practice:

“We will be transparent and clear with audiences when Generative AI output features in our content and services.”

Whilst it’s hard to argue with transparency and clarity as goals, achieving them is going to be increasingly difficult as AI permeates the production process in dozens, if not hundreds, of small ways.

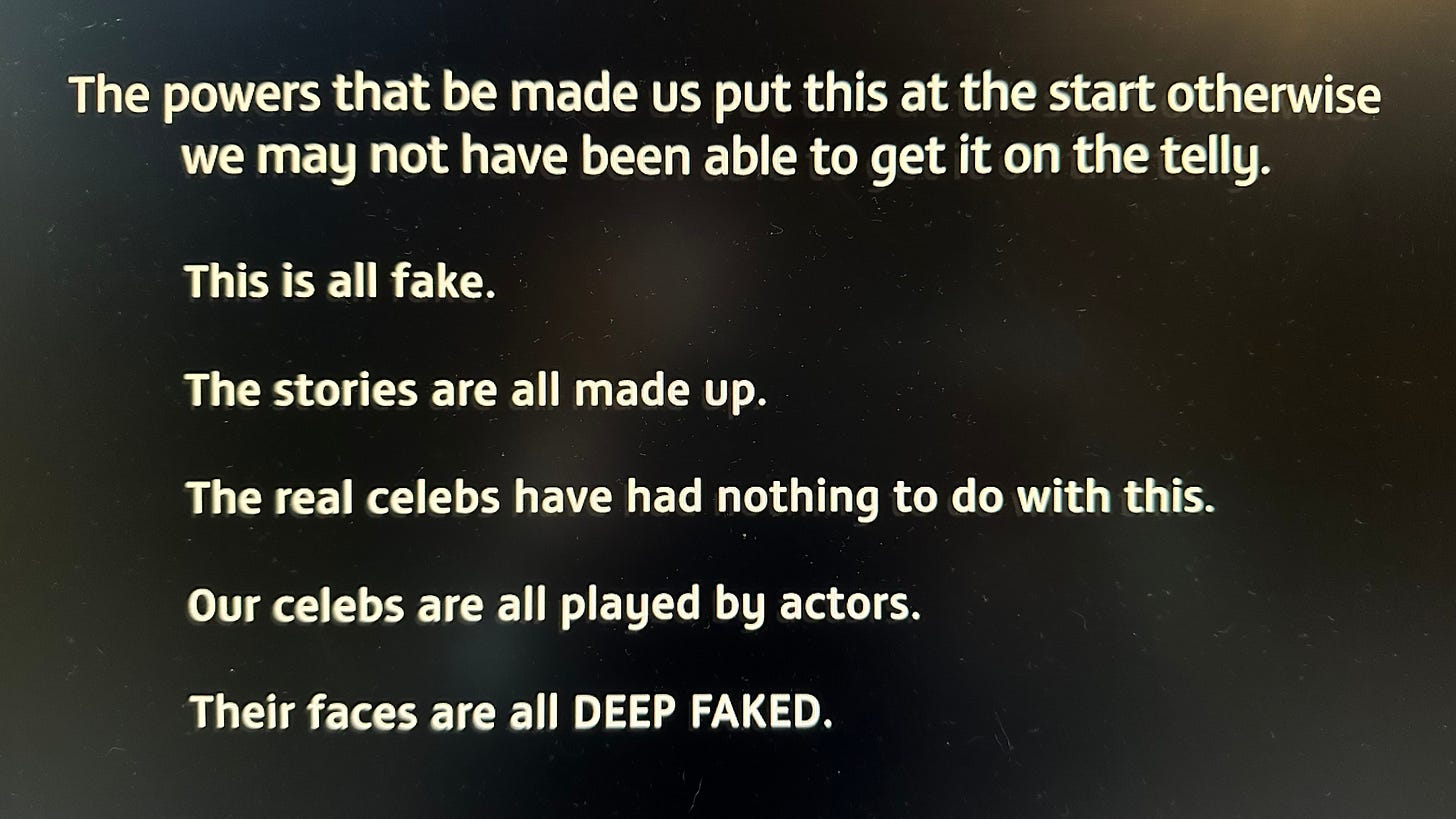

In this early stage of generative AI adoption, it’s tempting to think of the most obvious and visible uses of AI in media, such as ITV’s ‘Deep Fake Neighbour Wars’ (yes, really). Aside from the implicit disclaimer in the title, the producer/broadcaster can put a single interstitial up before the programme which makes the use of deep-faking crystal clear.

However, generative AI will be used in much more subtle and diffuse ways than deep-faking celebrities for laughs.

As a publicly-funded broadcaster, the BBC is quite rightly strict in avoiding undue prominence of commercial products in its programmes (remember Bake Off Smeg-gate?).

Historically, removing commercial branding from an item in shot would have been a manual post-production task. If generative AI were used to achieve the same end in the future, would it need to be declared and if so, how and where?

What if a scriptwriter has had a helping hand from an LLM? Or Strictly’s opening credits feature some GAN-generated glitter balls? Or some of the sound effects on the latest Attenborough doc are made by generative AI rather than a Foley artist? Or the virtual backdrop behind Laura Kuenssberg on election night includes some AI-generated imagery?

Disclosing the use of AI in TV production is quickly going to become the equivalent of saying “computers were used in the making of this programme”. That is, unless you’re going to ban producers from using it, and so limit their ability to realise the efficiency and productivity gains that generative AI can bring.

Right now, the use of generative AI in professionally produced output is a particularly charged topic, with undeclared uses being seized upon and assumptions made about the impact on flesh-and-blood creatives (see House of Earth and Blood’s cover art, Secret Invasion’s opening credits and Loki’s season 2 poster).

In time, I think media providers will come to focus more on outcomes than inputs. The key question won’t be ‘was generative AI used in the creation of this content?’, but rather ‘could the use of generative AI in the creation of this content mislead viewers in a way which is problematic?’

I suspect the BBC’s generative AI principles will, in time, be translated into more detailed guidelines which honour the spirit of the principle, whilst avoiding making the organisation a hostage to fortune for every undeclared use of generative AI.