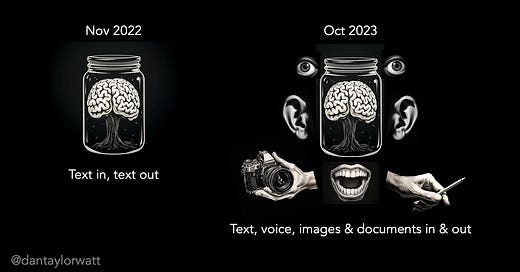

When ChatGPT launched last November, it could deal with just one medium: text.

What we discovered it could achieve with that single medium over the next 10 months was nothing short of extraordinary:

- Writing copy
- Generating ideas
- Summarising
- Explaining
- Recommending
- Proofreading
- Redrafting
- Translating
- Tutoring
- Role-playing
- Interviewing
- Coding
- Storytelling
- Game playing

The list goes on…

The launch of plugins in the spring opened up a new realm of possibilities, enabling access to up-to-date data sources and introducing document upload.

The rollout of Code Interpreter (since renamed Advanced Data Analysis) in July added data analysis to ChatGPT’s list of capabilities and gave a glimpse into AI’s ability to try different approaches in pursuit of a goal.

Last week, OpenAI began rolling out voice and image capabilities to ChatGPT Plus and Enterprise users*.

Whilst these capabilities aren’t in and of themselves novel, having them integrated into ChatGPT (still the most used AI chatbot by some margin) will make them accessible to many more people and enable the capabilities associated with the different modalities to interact with one another.

The ability to converse with an AI chatbot without touching your keyboard or reading reams of text feels like a nice option to have, and will be much more than that for some users with accessibility requirements. However, it doesn’t, on its own, open up many new use cases.

By contrast, the integration of image capabilities feels like it does. Only the input side of the image equation is currently widely available* (image generation via DALL-E 3 is gradually rolling out this month).

To give a flavour of the sort of interactions image input enables, here are three image-based interactions I had with ChatGPT today:

1.) Explain this cartoon

ChatGPT has never seen this image before (I drew it after the training data cutoff).

Whilst it’s not been able to identify the logos for Copilot or Cortana (fair enough), it’s identified the Microsoft logo and Clippy and has had a decent stab at guessing the meaning.

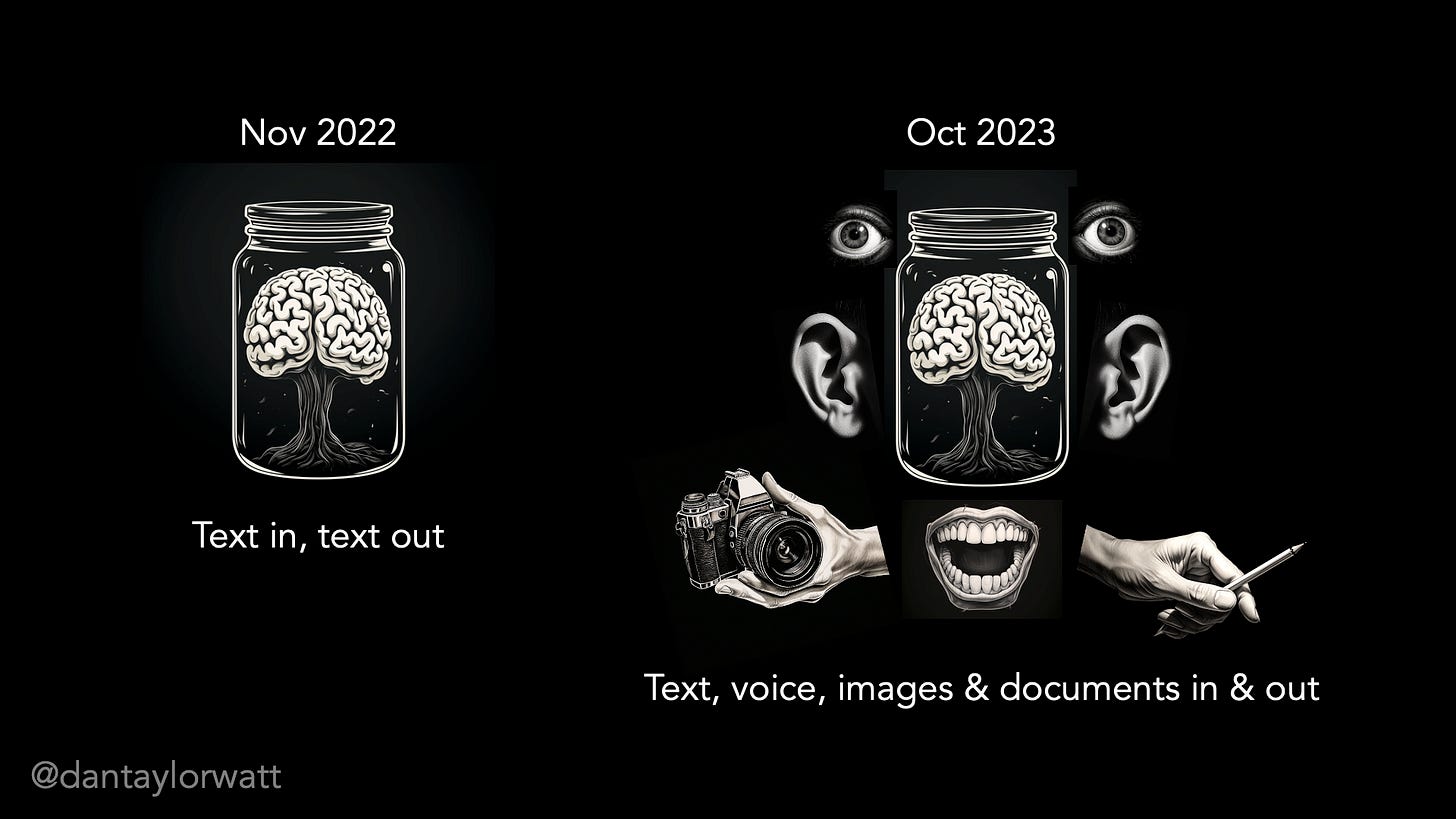

2.) What should I watch?

Here, I’ve given ChatGPT a look at my Netflix home screen and asked it what I should watch. I’ve told it previously (via Custom Instructions) that I like British comedies.

It’s analysed the cover art, checked the associated language and picked out three British comedies.

3.) Where should I post this content?

I wanted to see if ChatGPT could follow a hand-drawn decision tree, so I uploaded a tongue-in-cheek one I drew a while back.

It appeared to cope with this just fine.

As with text interaction over the last 10 months, it’s going to take time for the manifold use cases image input unlocks to become apparent.

The addition of image generation - and the back and forth it will enable between the different modalities - will multiply the possibilities still further.

So, our AI chatbots now have (metaphorical) eyes, ears and mouths (and arguably some limited-use hands). What next?

The current frontrunner is legs, in the form of devices that can travel around with you (and which aren’t constrained by your pocket or bag), hearing and seeing what you want them to and responding in the appropriate modality for the moment.

There are already a few early runners in the form of the AI Pin, the Rewind Pendant and Ray-Ban Meta smart glasses, although my money is, as usual, on Apple to add this to future generations of its established wearables (AirPods and Apple Watch) ahead of deeming a new device (most likely glasses) viable.

Oh, and ‘noses’ are also being worked on…

*Image inputs aren’t yet available on ChatGPT in the UK or EU. If you’re based in the UK or EU and are keen to experiment, Microsoft Bing Chat also has multimodal capabilities. Just select the camera icon underneath ‘Ask me anything…’ to upload an image or the microphone icon to interact verbally.