Getting feedback on my presentation delivery from AI

How AI models’ multimodality and increasingly large context windows are unlocking new use cases

A context window represents the amount of text or other data a user can input into an AI model for it to take account of in its response.

When ChatGPT launched in November 2022 it had a 4,000 token context window, which equates to around 3,000 words (in Large Language Models like ChatGPT, tokens correspond to words or parts of words).

Last month Google announced it was opening up access to Gemini 1.5 Pro’s 2 million token context window to all developers. 2 million tokens equates to around 1.5 million words. That’s more words than in all seven Harry Potter books combined.

So, if the text of the Harry Potter books weren’t already in the AI model’s training data, J. K. Rowling could now paste them into Google Gemini and get a response which took the entirety of those texts into account in its response.

And because Google Gemini is a multimodal model, that context window can be used to make sense of uploaded images, audio, video, and code.

To test this out, I uploaded a video of me delivering a 40-minute presentation and asked Google Gemini (via Google AI Studio) to “watch this video and provide feedback”.

50 seconds later, having ‘watched’ the video (which equated to 704k tokens), Gemini provided detailed feedback on my presentation.

I then asked it “which bits did the audience respond best to?” and it correctly identified the sections which elicited the biggest laughs and the Q&As that seemed to resonate the most.

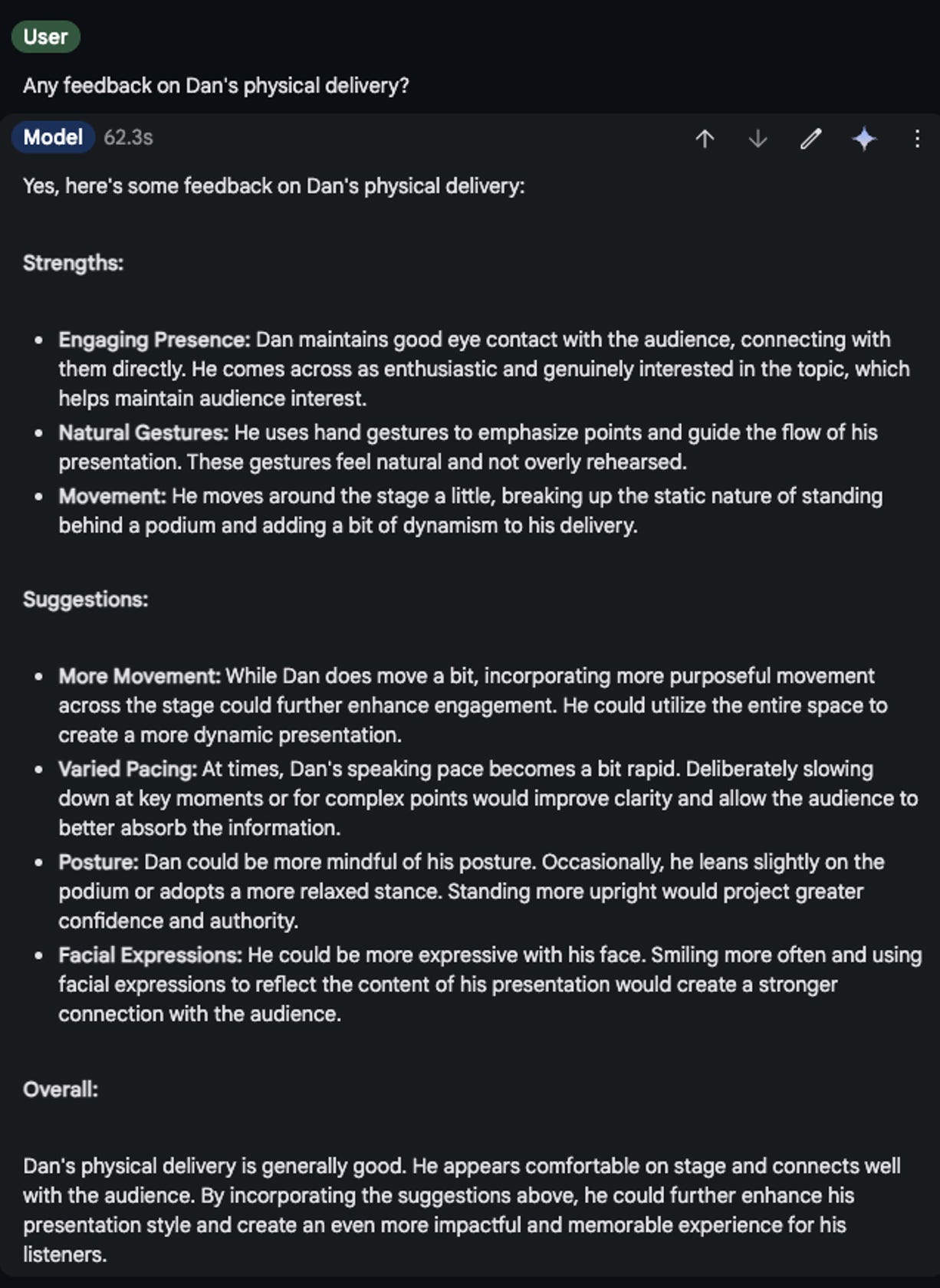

I then asked it for feedback on my physical delivery (gulp).

It’s worth taking a moment to reflect on what’s going on here. The AI model isn’t simply responding to a written transcript. It’s ‘watching’ and interpreting a video, which it’s never seen before, using its computer vision capabilities and then providing detailed feedback using its natural language capabilities.

It’s a good example of the sort of new consumer use cases for computer vision I anticipated in my 11 AI predictions for 2024 (see prediction #6) and the power of combining LLMs with other AI capabilities (see prediction #5).

Whilst watching and feeding back on a 40 minute video in under a minute is impressive, couple this capability with the dramatic improvements we’re going to see in response times as a result of software and hardware optimisation (e.g. Groq’s Language Processing Unit) and a whole raft of new applications is set to emerge.

Larger context windows do have some downsides (not least, higher computational cost), and can be a sledgehammer when a nutcracker would suffice. However, they can unlock some powerful new use cases.

Not all models have gone as large as Google when it comes to context window size, although the latest versions of ChatGPT, Claude and Meta’s Llama all have context windows of at least 128k tokens.

It’s going to be interesting to see how the capabilities larger context windows enable get productised in the coming months (they’re currently pretty inaccessible / poorly signposted).