Most mainstream hosted AI image generators (e.g. Adobe Firefly, DALL-E, Imagen, Midjourney) have safeguards in place to reduce the likelihood of them being used to generate deepfakes.

Open source models such as Stable Diffusion can be run locally, or hosted by less scrupulous companies, without such safeguards.

Last month Black Forest Labs released a new open source image generator, FLUX. A couple of weeks later, Elon Musk’s xAI integrated FLUX with its AI chatbot Grok (which is only available to X subscribers) with far fewer safeguards than other hosted image generators.

This led to a flood of FLUX-generated deepfake images on X.

The one saving grace of the current FLUX/Grok integration is that you can only generate images from text prompts (not from uploaded images). That limits the deepfakes to people with a high enough profile to have a large number of images tagged with their name in the training data.

Beyond the confines of Grok, however, it is possible to get open source models such as FLUX to learn to reproduce someone’s likeness through techniques such as Low-Rank Adaptation (LoRA).

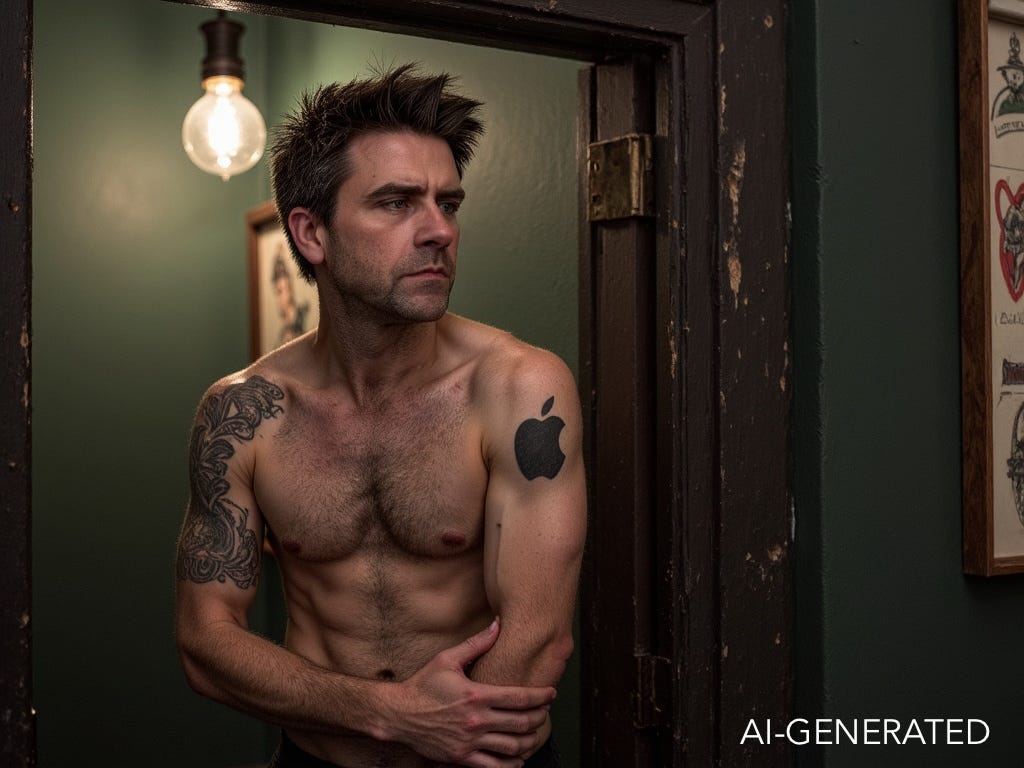

I decided to try deepfaking myself using this approach to see how difficult and time-consuming it would be, and what the quality of the output would be like.

I uploaded just 6 selfies, waited around 20 minutes for the model to train on those photos, then entered a range of prompts depicting me in situations I’m unlikely to find myself in. Each image took around 6 seconds to generate.

Whilst most of the images show some telltale signs of being AI-generated when scrutinised closely, the likeness is pretty good in all of them (with the exception of the ripped torso in the tattoo image). I’m honestly not sure I’d have been able to tell the rollercoaster image wasn’t a photo of me were it not for the exaggerated curvature of the Earth.

If you were wondering how easy it now is to create high-quality deepfakes, the answer is: very.

Whilst the Online Safety Act has made a start by criminalising the sharing of sexually explicit deepfakes without consent (with criminalisation of their creation in the pipeline), there is plenty of damage that other types of deepfake can, and will, do. There is currently no UK or US federal law that prevents the creation or sharing of political deepfakes.

South Korea was ahead of the game, passing legislation in 2020 that made it illegal to distribute deepfakes that could “cause harm to public interest”, with offenders facing up to five years in prison or fines of up to ₩50,000,000 (~£30,000). Legislation clearly isn’t a silver bullet, though.

Dan’s Media & AI Sandwich is free to read. However, if you’d like to chip in to support my writing, you can now do so by becoming a paid subscriber. Contributions make it easier for me to dedicate more time to writing. Thanks to those of you who’ve already pledged. If you’d prefer to make a one-off contribution you can do so at buymeacoffee.com/dantaylorwatt.

https://civitai.com/bounties is an open marketplace that until recently featured lots of bounties for deepfake models, and it still has plenty of trademark infringement.

https://civitai.com/search/models?sortBy=models_v9&query=sarah%20andersen shows that CivitAI still happily hosts deepfake models of celebrities, AI lawsuit plaintiffs and children.

It's scum like this that the law needs to go after. Platforms and their financiers, not end-users.