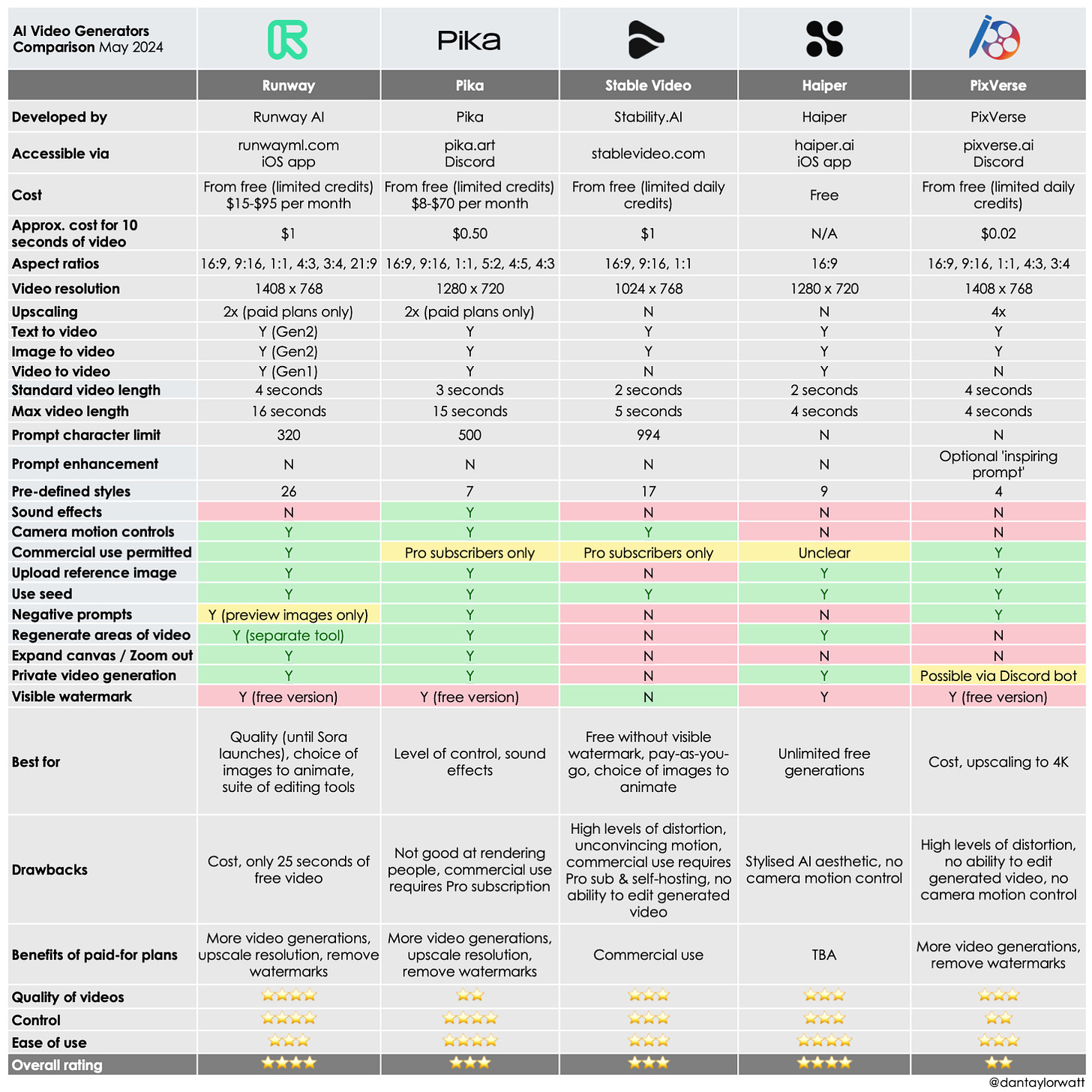

AI Video Generators Comparison

Whilst we patiently wait for Sora’s public release, here’s a look at how some of the leading AI video generators currently stack up against one another.

Runway is currently just out in front when it comes to quality. Its generated videos show less distortion than those of the other models, and it makes a better fist of animating people and faces. The ability to generate 4 preview images for free before committing to a video cuts down on wasted generations, and it offers a decent range of editing tools. However, it’s not the cheapest. Once you’ve used up your 25 seconds of free video, you’re looking at around a dollar for every 10 seconds of video generated. Prompts are also limited to 320 characters, ruling out some of the more descriptive prompts OpenAI used to show off Sora’s capabilities.

Pika works out at around half the price (50 cents for 10 seconds) and offers even more control than Runway. However, the quality of the generations is much lower. It particularly struggles to render and animate lifelike people. It also can’t be used commercially without a Pro subscription, which is $58 a month.

Haiper definitely feels like one to watch. Generated videos tend to have a stylised AI aesthetic and there’s currently no option to define camera motion. However, it’s really easy to use and is currently free. I couldn’t ascertain whether generations can be used commercially.

Stable Video is primarily a shop window for Stability AI’s Stable Video Diffusion model. Consequently, less love has gone into the UI and there are no tools to edit generated video. It does offer a choice of images to animate and is the one model I tested which doesn’t slap a watermark on freely generated videos. However, the videos suffer from high levels of distortion and unconvincing motion. Commercial use requires a Pro subscription and self-hosting.

Bringing up the rear is PixVerse, which is cheap as chips (~2 cents for 10 seconds of video!) and offers upscaling to 4K. However, it suffers some of the worst distortion and offers no option to define camera motion or edit videos post-generation.
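For a rough sense of how those prices compare over a longer clip, here’s a back-of-the-envelope sketch in Python. It assumes the approximate per-10-second prices quoted above scale linearly with length, and it ignores free allowances and subscription tiers. The names (`PRICE_PER_10_SECONDS`, `estimated_cost`) are my own, purely for illustration.

```python
# Approximate prices quoted above, in US dollars per 10 seconds of video.
# Haiper is currently free; Stable Video is omitted because no per-clip
# price is quoted for it. Assumption: cost scales linearly with length.
PRICE_PER_10_SECONDS = {
    "Runway": 1.00,
    "Pika": 0.50,
    "PixVerse": 0.02,
    "Haiper": 0.00,
}


def estimated_cost(tool: str, seconds: float) -> float:
    """Estimate the cost in dollars of generating `seconds` of video."""
    return round(PRICE_PER_10_SECONDS[tool] * seconds / 10, 2)


if __name__ == "__main__":
    for tool in PRICE_PER_10_SECONDS:
        print(f"{tool}: ${estimated_cost(tool, 60):.2f} per 60 seconds")
```

On those numbers, a minute of video works out at roughly $6 on Runway, $3 on Pika and 12 cents on PixVerse.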

From what we’ve seen of Sora so far, it looks set to blow past the current competition with more photorealistic output, less distortion, better rendering of people and faces, a greater (but by no means perfect) respect for real-world physics, and generations of up to 60 seconds.

The Prompts

Here’s how the five models I tested coped with the same four prompts.

My first prompt (“A young woman walking towards the camera in the rain in London's Piccadilly Circus”) was designed to test rendering and animating a person, specific weather conditions and a well-known location. Runway’s woman was anatomically correct, if a bit synthetic, with low-risk slow-mo animation. It was the most plausible Piccadilly Circus of the five, with a red Routemaster and neon billboards. However, the woman is definitely not walking towards the camera. Stable Video generated the most photographic starting image, but didn’t animate the woman successfully and didn’t evoke Piccadilly Circus. Haiper’s woman was a bit less stable between frames and the background was rainy but not identifiably London. Pika managed rain (with sound effect) and billboards, but didn’t conjure London and totally struck out with the woman, who looks and moves like a bad video game avatar. PixVerse rendered a superior video game avatar but with quite a lot of swimming between frames.

My second prompt (“Extreme close up of a 24 year old woman’s eye blinking, standing in Marrakech during magic hour, cinematic film shot in 70mm, depth of field, vivid colors, cinematic”) I borrowed from OpenAI’s Sora demos to see how other models would render one of the most unforgiving of human features in specific lighting conditions. Runway did well on anatomical realism but again felt a bit synthetic and lost focus after a few seconds. Stable Video again generated a fairly photorealistic starting image, with a nod to Morocco, but failed to animate convincingly, with a distracting glitch in one of the eyes. Pika was the least photorealistic and suffered the most swimming between frames. Haiper hit every element of the prompt but suffered a distracting visual glitch with the eyebrow. PixVerse went very quickly to soft focus but definitely couldn’t be mistaken for real footage. I’ve included Sora’s response to this prompt at the end of the compilation below to show the others how it should be done ;)

My third prompt (“A cartoon kangaroo disco dances”) aimed to test the models’ animation skills. Runway created the most appealing cartoon kangaroo, but the dancing was very limited and in slow motion, and it struggled with the kangaroo’s arms blending into its body. Stable Video started with a plausible cartoon kangaroo and disco but suffered the most extreme distortion. Pika went for a more traditional 2D cartoon drawing but didn’t manage any dancing and struggled with anatomical oddities. Haiper also went 2D with what’s very clearly a kangaroo, but then didn’t manage to persuade it to dance. PixVerse really struggled with this prompt, morphing between various different animals, none of which remotely resemble a kangaroo. This is another one of the prompts OpenAI used to demo Sora, so I’ve tacked that video on the end.

My fourth and final prompt (“A can of Heinz baked beans exploding”) challenged the models’ ability to render text, iconic branding and animation involving complex real-world physics. Unsurprisingly, they all struggled with this one. Runway did the best job rendering the text and the iconic can, but didn’t manage a convincing bean explosion. Stable Video misspelled the text but still captured the spirit of the logo and created the most realistic shower of baked beans. Pika had all the ingredients (plus a sound effect) but suffered high levels of distortion. Haiper didn’t manage to render the brand, but did an okay job on the explosion (with some bonus pyrotechnics). PixVerse slightly ducked the challenge by not attempting to render the text, cropping to the top of an open can, and animating flames rather than an explosion.

In case you missed them, here are my recent comparisons of AI chatbots and AI image generators.