To date, most AI video generation models have been made available via simple web interfaces that let users input text prompts and/or reference images and generate a single video clip, which they can then view or download for use elsewhere.

Now products are starting to appear that aim to handle more of the video production process than just generating standalone clips. Some are adding AI video generation to a familiar (to filmmakers) visual timeline-based interface. Others are attempting to make a scene-based script or chatbot the primary interface.

Here are some of the early entrants:

Google Flow

Alongside its latest video generation model, Veo 3, Google recently unveiled Flow, its “AI filmmaking tool built with and for creatives for the next wave of storytelling”.

Accessible to Google AI subscribers, Flow enables users to generate clips using Veo 2 or 3 and then stitch them together using ‘Scenebuilder’. Camera movements (e.g. Dolly up, Jib down) can be prescribed, videos extended and new videos generated from individual frames.

More advanced functionality is reserved for Google AI Ultra subscribers (£234.99pm). ‘Ingredients-to-Video’ enables users to specify elements (e.g. subject, location, object, style) and describe how they want them to be combined.

Flow’s editing capabilities are a far cry from the sophistication of Adobe Premiere Pro or even cheaper online tools such as CapCut, but its integration with Google’s image and video generation models is going to make Flow appealing to a lot of online creators. And of course it’s only going to get better.

Interestingly, Google has created Flow TV, a dedicated space for viewing other people’s Flow creations that adopts the language and UI conventions of broadcast TV (e.g. channel up/down arrows).

Electric Sheep

Less high-profile was the recent launch of Electric Sheep’s eponymous AI-powered video platform, which claims to be “democratizing Hollywood-grade content creation”. Their About page says the CEO “spent a decade behind the cameras on major productions, working on workflows for Disney, Netflix, and Amazon” before a lightbulb-moment realisation that “all this Hollywood magic could be automated and democratized”.

The web-based platform is centred around a classic timeline-based editor interface to which you can upload media, with the option of using leading AI models (including GPT Image and Veo 3) to generate images and video, translate audio and create edits.

The product is clearly a work in progress, with some interface oddities and a number of features badged as ‘coming soon’, although the accompanying ‘vote for this feature on our roadmap!’ messaging is a nice touch which suggests they are trying to evolve the product with input from users.

Eddie AI

Launched last October, Eddie AI bills itself as “your AI assistant video editor”. The interface is a minimalist full-screen chatbot, which it unashamedly describes as offering ‘ChatGPT-style editing’. The primary use cases it foregrounds are organising A-roll and B-roll interview footage, identifying the best bits and creating rough cuts or social media clips. It doesn’t offer AI video generation. Instead, it’s focused on speeding up the editing process and is happy to hand you off to other, more fully-featured tools (Premiere Pro, DaVinci Resolve and Final Cut Pro are all namechecked) for further finessing. It will be interesting to see whether its developers start adding more capabilities (it added automatic multicam editing a few months ago) or it gets acquired by a company targeting more of the production tool chain.

Clova

Another new entrant is Clova. Like Eddie AI, it’s focused purely on editing rather than generation and promises to “edit your videos with simple text prompts” delivering “context-aware cuts” with “no editing experience required”. Developed by a team of three (see their viral launch video and follow-up behind the scenes), it’s pretty basic and buggy, but its core functionality is likely to be adopted by bigger players and I suspect its creators will be snapped up by one of the AI labs.

Story Studio

AI storytelling platform Story.com recently added Story Studio (not to be confused with Snap’s video editing app of the same name), which promises “everything you need to turn a simple prompt into a cinematic experience”. The free ‘Limited Preview Mode’ supposedly allows you to generate 15 seconds of video, although all I could get its ‘Movie Agent’ (can we stop with the agents already?) to generate was a crude and decidedly uncinematic image with an unrequested voiceover. The upsell to the Premium version (starting at a punchy £100pm) promises use of “OpenAI & Anthropic's latest video models” - odd, when Anthropic hasn’t released a video model 🤷

Of course, not every AI filmmaking tool is trying to clear the bar of ‘Hollywood-grade content creation’. Many are targeting marketers and influencers with platforms focused on head-shot videos - a narrower domain, although an unforgiving one when it comes to quality.

HeyGen AI Studio

Last month, leading AI avatar generator HeyGen launched AI Studio. Whilst it does still have a video timeline, it foregrounds a scene-based script as the primary editing tool:

“Traditional editors rely on complex timelines. But with HeyGen, you generate your video from a script, no editing knowledge required”

You can type your script, upload audio or record new dialogue for your selected avatar to voice. Scenes can be reordered on a timeline and simple overlays and transitions added.

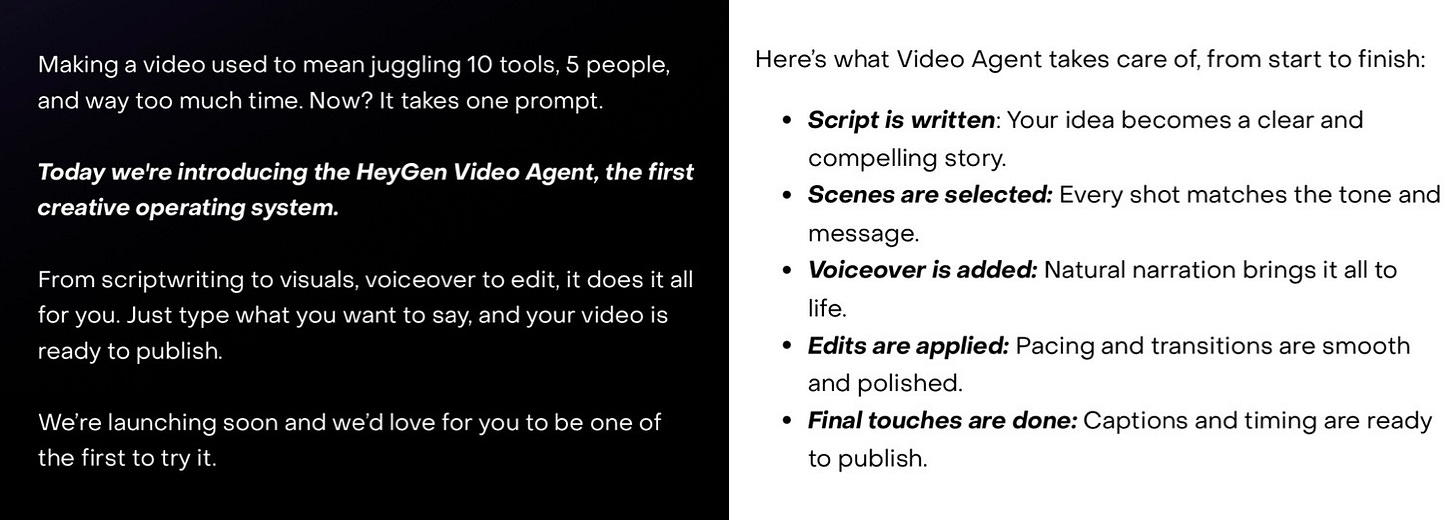

HeyGen has also opened a waitlist for HeyGen Video Agent, which it’s billing as “the world’s first Creative Operating System”. The hyperbolic launch video, posted on the CEO’s X account, tells us “This isn’t an editing tool with AI inside. This is AI that thinks like a creative team and works like a production crew”.

In these fledgling products, we’re seeing a few different approaches to building AI-powered filmmaking software.

1.) Adding AI asset generation to the established visual timeline editor paradigm (see Flow and Electric Sheep).

2.) Encouraging chatbot-based interaction and initiative-taking ‘agents’ (see Clova, Eddie AI, Story Studio and Manus video generation).

3.) Foregrounding a scene-based script as the primary editing paradigm (HeyGen AI Studio).

It will be interesting to see to what extent users of different levels of experience adopt these approaches and what new approaches are still to emerge (it’s surely only a matter of time before someone takes advantage of the recent advances in voice AI to make voice-based editing the primary interaction paradigm).

Of course, the current market leaders in filmmaking software aren’t going to go down without a fight. Adobe has been gradually adding AI capabilities to Premiere Pro (e.g. Generative Extend) and has signalled its intention to integrate leading third-party image and video generation models alongside its own Firefly.

ByteDance’s CapCut, still a relative newcomer to the filmmaking software party, has been bolder than Adobe in adding AI-powered functionality and has seen monthly active users grow to around half a billion, largely by channelling users from TikTok.

As in so many domains (e.g. search, shopping, document editing), there’s a race on between incumbents and new AI-centric entrants. Can the likes of Adobe, Apple and Blackmagic Design successfully AI-ify their software faster than AI companies can emulate established category functionality?

Video production is a broad category and it’s only going to get broader as AI lowers historical barriers to entry. It’s likely that established players will continue to dominate the top of the market (habit + specialist bells and whistles) but that independent filmmakers, content creators and marketers will increasingly opt for a cheaper challenger option.

*grabs popcorn*